Rotating Proxies: Keep the Data Flowing

Scraping and other kinds of data collection happen all the time. An exceptional amount of internet traffic is not generated directly by humans; instead, it is automated through bots. I wanted to share one method to assist with data collection.

I was working on a data collection project the other day and found a perfect website: I could send data to it, have it do work on that data, and then scrape the results. However, I ran into an issue that every scraper will hit at some point: an access cap on the site. You will know you have hit the wall when your browser shows a screen saying "Hey, you have reached your limit for today. We only allow blah blah blah attempts..."

For my project I have over 1300 unique pieces of data I want to run through the site, and my cap on the website was 10 connections a day. If I stick to this rule it will take forever (1300 / 10 = 130 days).

One method I use, and one that is quite popular among scrapers, is to connect through proxies. I have built a proxy collection script that I import into new scraping scripts so that they can use the proxies it grabs.

Below I will show you my method for importing one Python script into another and using the transferred data in the new script. This method makes rotating proxies much easier.

(If you do some research you will see that there are many ways to achieve this goal [rotating proxies]; however, I have some specific reasons for this method, and I will highlight them as we go.)

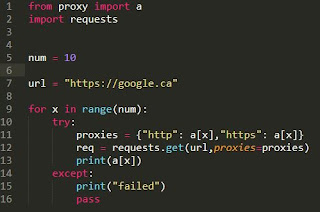

*I am going to walk through the above script line by line.

*For reference, the above script is called call.py.

*The above script is not my project; it is built to demonstrate rotating proxies.

1-2: Importing the requests module is essential for sending GET requests to websites.

You will also see "from proxy import a". In the same folder as call.py I have a script called proxy.py; inside this script I scrape a free proxy list website and append all the proxies to a list called a.

In proxy.py I retrieve only the https:// proxies.
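proxy.py itself is not shown, so here is a minimal sketch of the "keep only https proxies" step under stated assumptions: the free proxy site is assumed to publish an HTML table whose first two columns are the IP and port and which has a column headed "Https" containing yes/no. The function name `parse_https_proxies` and the `PROXY_LIST_URL` placeholder are hypothetical, and parsing uses only the standard library's `html.parser`.

```python
from html.parser import HTMLParser


class _TableRows(HTMLParser):
    """Collect the text of every <td>/<th> cell, grouped by <tr> row."""

    def __init__(self):
        super().__init__()
        self.rows = []
        self._cell = None  # text fragments of the cell currently open, if any

    def handle_starttag(self, tag, attrs):
        if tag == "tr":
            self.rows.append([])
        elif tag in ("td", "th") and self.rows:
            self._cell = []

    def handle_data(self, data):
        if self._cell is not None:
            self._cell.append(data)

    def handle_endtag(self, tag):
        if tag in ("td", "th") and self._cell is not None:
            self.rows[-1].append("".join(self._cell).strip())
            self._cell = None


def parse_https_proxies(html):
    """Return "ip:port" strings for rows whose (assumed) Https column says yes."""
    parser = _TableRows()
    parser.feed(html)
    rows = [row for row in parser.rows if row]
    if not rows:
        return []
    header = [name.lower() for name in rows[0]]
    https_col = header.index("https")  # assumption: raises ValueError if absent
    return [
        f"{row[0]}:{row[1]}"  # assumption: IP in column 0, port in column 1
        for row in rows[1:]
        if len(row) > https_col and row[https_col].lower() == "yes"
    ]


# In proxy.py, `a` would then be built at import time, e.g.
# (PROXY_LIST_URL is a hypothetical placeholder for the site you scrape):
# a = parse_https_proxies(requests.get(PROXY_LIST_URL, timeout=10).text)
```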

There are many ways to do this, and you will even see scripts that grab the free proxies and use them all within one script. The reason I like doing it this way is that the entire proxy list is loaded into memory, so I am not sending out hundreds of requests to retrieve the next proxy on the list. Python lists are also very simple to work with.
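Because the whole list lives in memory, getting the next proxy is just an iterator step. One option, not necessarily what the author does, is `itertools.cycle`, sketched below. The addresses are placeholders from the 203.0.113.0/24 documentation range standing in for the imported list `a`, and `next_proxy` is a hypothetical helper name.

```python
from itertools import cycle

# Placeholder addresses standing in for the list `a` from `from proxy import a`.
a = ["203.0.113.10:8080", "203.0.113.11:3128", "203.0.113.12:80"]

# cycle() walks the in-memory list and wraps around at the end, so picking
# the next proxy never requires another request to the proxy-list site.
proxy_pool = cycle(a)


def next_proxy():
    """Return the next proxy from the in-memory pool."""
    return next(proxy_pool)
```

One trade-off: `cycle` keeps handing back proxies that already failed, so a longer-running scraper might remove dead entries from the list instead.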

3-8: Setting the variables num and url.

9-16: (the image above is for lines 9-16)

call.py is a very simple script to demonstrate the method. As you can see, we are sending requests to google.ca.

I set up a for loop that cycles through an arbitrary 10 times.

I use try/except, which is extremely useful for automatically testing items: if an attempt doesn't work, the exception is caught and handled instead of crashing the script.

Line 11: we set proxies, giving both http and https the IP and port that were retrieved by the proxy.py script.

Line 12: here we actually send the GET request; notice that we attach the modified proxies.

Lines 14-16: if the request does not work with the retrieved proxy, I want the script to just keep going and try the next proxy IP/port. I achieve this by catching the exception and using "pass". I have added "failed" as a visual in this demonstration; in a real scraping project I may or may not include it.
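Since call.py only appears as an image, here is a rough reconstruction of lines 9-16 as described above. It is a sketch, not the author's exact code. Assumptions: proxies are "ip:port" strings (as the list `a` from proxy.py would hold), `rotate` and `fetch_via_proxy` are hypothetical names, and requests are sent with the `requests` library and a 5-second timeout. The fetch function is a parameter so the loop can be exercised without network access.

```python
URL = "https://www.google.ca"  # demo target from the post
NUM = 10  # arbitrary number of attempts, as in the post


def fetch_via_proxy(url, proxy, timeout=5.0):
    """Send one GET request through `proxy` ("ip:port"); return the status code."""
    import requests  # third-party; imported here so rotate() can be tested without it

    # Route both plain-HTTP and HTTPS traffic through the same proxy.
    proxies = {"http": f"http://{proxy}", "https": f"http://{proxy}"}
    response = requests.get(url, proxies=proxies, timeout=timeout)
    response.raise_for_status()  # treat 4xx/5xx answers as failures too
    return response.status_code


def rotate(url, proxy_list, num, fetch=fetch_via_proxy):
    """Try up to `num` proxies in order and return the ones that worked."""
    working = []
    for proxy in proxy_list[:num]:
        try:
            status = fetch(url, proxy)
        except OSError:
            # requests' exceptions (ConnectionError, Timeout, ProxyError,
            # HTTPError, ...) subclass OSError, so a dead proxy lands here
            # and the loop simply moves on to the next one.
            print(proxy, "failed")
        else:
            print(proxy, status)
            working.append(proxy)
    return working
```

In call.py this would be used as `from proxy import a` followed by `rotate(URL, a, NUM)`. The `except ... else` split does the same job as the `pass` in the original: failures are swallowed and the loop continues.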

Additional notes:

You may have noticed that there were a bunch of failed attempts. I was OK with this, because 4 of the 10 attempts worked. I retrieved over 150 https:// proxies; if I had included http:// proxies, I would have had over 600.

An interesting aspect of scraping is that the website will often tell you it only allows "10" attempts a day, but when I tested this site I was able to successfully send at least 100 requests. So with my 4 successful proxies out of 10, that gives me 400 requests, or 500 including my original IP. My total list is 1300 unique datasets (1300 - 500 = 800), so I only need 8 or 9 more working proxies to complete all my work!!
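The arithmetic above generalizes to a small helper. This is a sketch: `proxies_needed` is a hypothetical name, and the per-IP limit of 100 is the author's observed figure for this one site, not a guaranteed number.

```python
import math


def proxies_needed(total_items, per_ip_limit, working_proxies, include_own_ip=True):
    """Extra working proxies needed to push `total_items` requests through
    a site that allows `per_ip_limit` requests per IP."""
    ips = working_proxies + (1 if include_own_ip else 0)
    remaining = max(0, total_items - ips * per_ip_limit)
    # Round up: a partially used proxy still counts as one more proxy.
    return math.ceil(remaining / per_ip_limit)
```

`proxies_needed(1300, 100, 4)` returns 8, matching the paragraph above; with the advertised cap of 10 per day, the same call with `per_ip_limit=10` returns 125.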

Be careful using free proxies. I was OK with it in this project because I don't care if the owner of the free proxy observes my work. Individually, the retrieved data means very little at a granular level; only when I correlate all of it does it become information.

Reference: